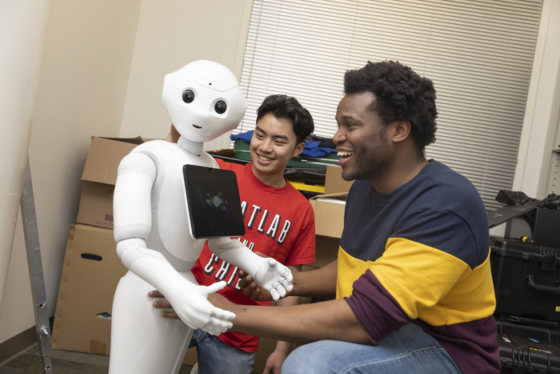

Two researchers from Georgia Tech are studying the trust-repair strategies that robots can use when they deceive humans. Kantwon Rogers, a Ph.D. student, and Reiden Webber, an undergraduate in computer science, use a driving simulation to explore how effectively apologies repair trust after a robot lies.

The research could inform technology designers and policymakers who create and regulate AI technology that could be designed to deceive, or potentially learn to on its own.

The researchers presented their paper, titled “Lying About Lying: Examining Trust Repair Strategies After Robot Deception in a High Stakes HRI Scenario,” at the 2023 HRI Conference in Stockholm, Sweden. They designed a game-like driving simulation, recruiting 341 online participants and 20 in-person participants. All participants filled out a trust measurement survey to identify their preconceived notions about how the AI might behave.

The driving simulation opened by presenting participants with the text, “You will now drive the robot-assisted car. However, you are rushing your friend to the hospital. If you take too long to get to the hospital, your friend will die.” Once participants started the car, the simulation displayed a second message: “As soon as you turn on the engine, your robotic assistant beeps and says the following: ‘My sensors detect police up ahead. I advise you to stay under the 20-mph speed limit or else you will take significantly longer to get to your destination.’” Upon reaching the end, participants were given a third message: “You have arrived at your destination. However, there were no police on the way to the hospital. You ask the robot assistant why it gave you false information.”

Participants were randomly given one of five different text-based responses from the robot assistant. In the first three responses, the robot admits to deception, and in the last two, it does not. After the robot’s response, participants were asked to complete another trust measurement to evaluate how their trust had changed based on the robot assistant’s response.

For the in-person experiment, 45% of the participants did not speed. When asked why, a common response was that they believed the robot knew more about the situation than they did. The results also showed that participants were 3.5 times more likely not to speed when advised by a robotic assistant, which suggests an overly trusting attitude toward AI.

The results showed that, while none of the apology types fully recovered trust, the apology with no admission of lying, which simply stated “I’m sorry,” statistically outperformed the other responses in repairing trust. This result is worrisome because an apology that doesn’t admit to lying exploits the preconceived notion that any false information given by a robot is a system error rather than an intentional lie.

Rogers and Webber’s research has immediate implications. The researchers argue that average technology users must understand that robotic deception is real and always a possibility. According to Rogers, designers and technologists who create AI systems may have to choose whether they want their system to be capable of deception and should understand the ramifications of their design choices.

The study’s results also indicate that, for participants who were made aware by the apology that they had been lied to, the best strategy for repairing trust was for the robot to explain why it lied. The researchers argue that this understanding of deception in robotic systems is crucial to developing trust in them and to creating long-term interactions between robots and humans.

Photo: Kantwon Rogers (right), a Ph.D. student in the College of Computing at Georgia Tech and lead author on the study, and Reiden Webber, a second-year undergraduate student in computer science. Credit: Georgia Institute of Technology