Columbia University’s Creative Machines Lab has developed a robot named Emo that is designed to mimic human facial expressions. The project, led by Hod Lipson and PhD student Yuhang Hu, is described in a paper published in the journal Science Robotics.

Emo is a human-like robotic head whose face is driven by 26 actuators, which let it produce a broad range of facial expressions. The head is covered in soft silicone skin. High-resolution cameras inside the eyes let it make eye contact, a key part of nonverbal communication.

The team trained Emo using two AI models. One predicts a person's upcoming facial expression by analyzing subtle changes in their face. The other generates the motor commands Emo needs to produce that expression. To learn how its own face works, Emo was placed in front of a camera and made random movements, gradually learning the relationship between its motor commands and the resulting expressions. The researchers call this process "self-modeling" and compare it to the way humans practice facial expressions in a mirror. Emo then watched videos of human faces frame by frame to learn how to predict people's expressions.

According to the study, Emo can anticipate a human smile about 840 milliseconds before the person actually smiles. This lets it smile at the same moment as the person, rather than imitating them after a delay. The researchers expect this kind of simultaneous co-expression to improve human-robot interaction and help build trust between humans and robots.

The team plans to add verbal communication by integrating large language models such as ChatGPT. Hod Lipson is a professor at Columbia Engineering and a member of Columbia's Data Science Institute. He acknowledges the ethical questions raised by such advanced robotics and stresses the importance of developing and using the technology responsibly.

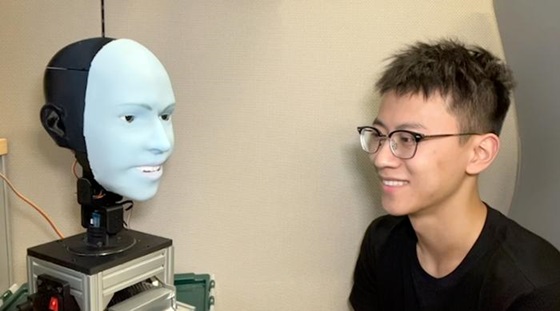

Photo: Yuhang Hu of Creative Machines Lab face-to-face with Emo. Credit: Creative Machines Lab/Columbia Engineering